ZalCG computational performance

This page discusses the computational performance of the ZalCG solver. The timings demonstrate that there is no significant scalability bottleneck in computational performance.

Strong scaling of computation

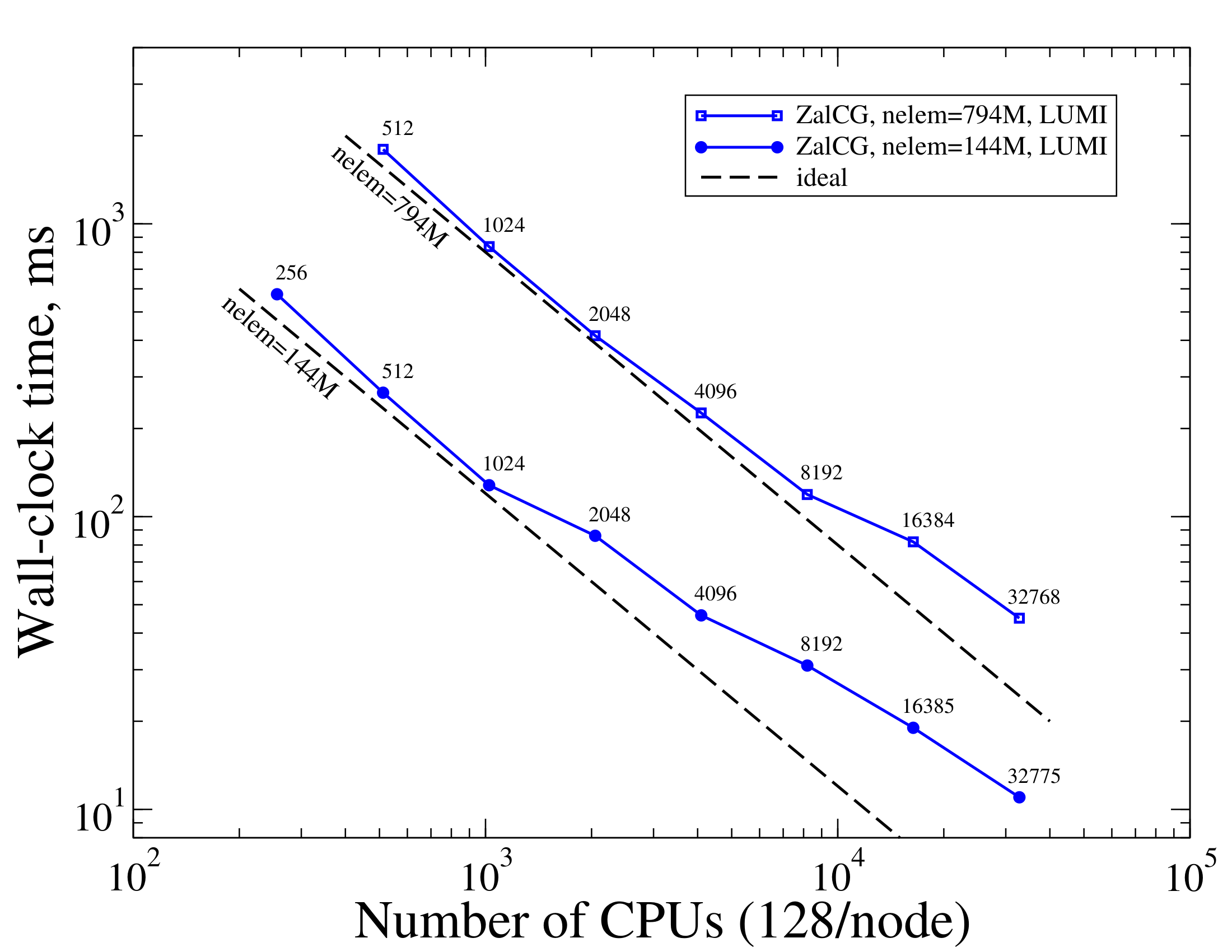

Running the same problem on an increasing number of compute cores measures strong scaling, a characteristic of the algorithm and its parallel implementation. Strong scalability helps answer questions such as how much faster one can obtain a given result (at a given level of numerical error) if larger computational resources were available. To measure strong scaling we ran the Taylor-Green problem using a 794M-cell and a 144M-cell mesh on a varying number of CPUs for a few time steps and measured the average wall-clock time it takes to advance a single time step. The figure depicts timings measured for both meshes on the LUMI computer.

The figure shows that the ZalCG solver, while not ideal for all runs, scales well into the range of O(10^4) CPU cores. In particular, strong scaling is ideal at and below 1024 CPUs using the smaller workload of 144M cells, and at and below approximately 8192 CPUs for the larger mesh. The departure from ideal is indicated by nonzero angles between the ideal and the blue lines. The data also show that, though non-ideal above these points, parallelism is still effective in reducing wall-clock time with increasing compute resources for both problem sizes: even for the largest runs, time-to-solution still largely decreases with increasing resources.
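The departure from ideal scaling can also be expressed numerically as speedup and parallel efficiency relative to the smallest run. The following is a minimal sketch of that calculation; the function name `strong_scaling` and the timings are hypothetical placeholders for illustration and are not measured ZalCG data.

```python
def strong_scaling(cores, times):
    """Return (speedup, efficiency) lists relative to the first (smallest) run.

    cores: number of CPU cores used in each run, in increasing order
    times: average wall-clock time per time step for each run
    """
    base_cores, base_time = cores[0], times[0]
    # Speedup: how many times faster each run is than the baseline run.
    speedup = [base_time / t for t in times]
    # Efficiency: measured speedup divided by the ideal speedup (cores ratio).
    efficiency = [s * base_cores / c for s, c in zip(speedup, cores)]
    return speedup, efficiency


# Hypothetical timings in seconds per time step (NOT measured ZalCG data).
cores = [1024, 2048, 4096, 8192]
times = [0.80, 0.40, 0.22, 0.13]

speedup, efficiency = strong_scaling(cores, times)
for c, s, e in zip(cores, speedup, efficiency):
    print(f"{c:6d} cores: speedup {s:5.2f}, efficiency {e:5.2f}")
```

An efficiency of 1 corresponds to ideal strong scaling; values below 1 correspond to a measured curve that bends away from the ideal line in a log-log plot.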

As usual with strong scaling, as more processors are used with the same-size problem, communication will eventually overwhelm useful computation and the simulation will not get any faster with more resources. The figure above shows that this point has not yet been reached at approximately 32K CPUs for either of these two mesh sizes on this machine. The point of diminishing returns is determined by the scalability of the algorithm, its implementation, the problem size, the efficiency of the underlying runtime system, the hardware (e.g., the network interconnect), and their configuration.
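The existence of such a point of diminishing returns can be illustrated with a toy cost model. The sketch below assumes that the time per step is a perfectly divisible amount of work plus a communication cost that grows with the logarithm of the core count. Both the form of the model and the constants `work` and `comm` are assumptions chosen for illustration only; they are not derived from ZalCG or from LUMI measurements.

```python
import math


def step_time(p, work=100.0, comm=0.0005):
    """Toy model of time per step on p cores (illustrative assumption only).

    work / p           : computation, which shrinks as cores are added
    comm * log2(p)     : communication, which grows as cores are added
    """
    return work / p + comm * math.log2(p)


# Core counts as powers of two, from 1K to 1M.
cores = [2**k for k in range(10, 21)]
times = [step_time(p) for p in cores]

# The core count with the smallest modeled time marks the point beyond
# which adding resources no longer reduces time-to-solution in this model.
best = cores[times.index(min(times))]
print(f"modeled time per step is smallest at {best} cores")
```

In this model a larger `work` (a bigger mesh) moves the turnover point to a higher core count, which is consistent with the larger mesh scaling ideally over a wider range than the smaller one.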

Strong scaling of computation – second series

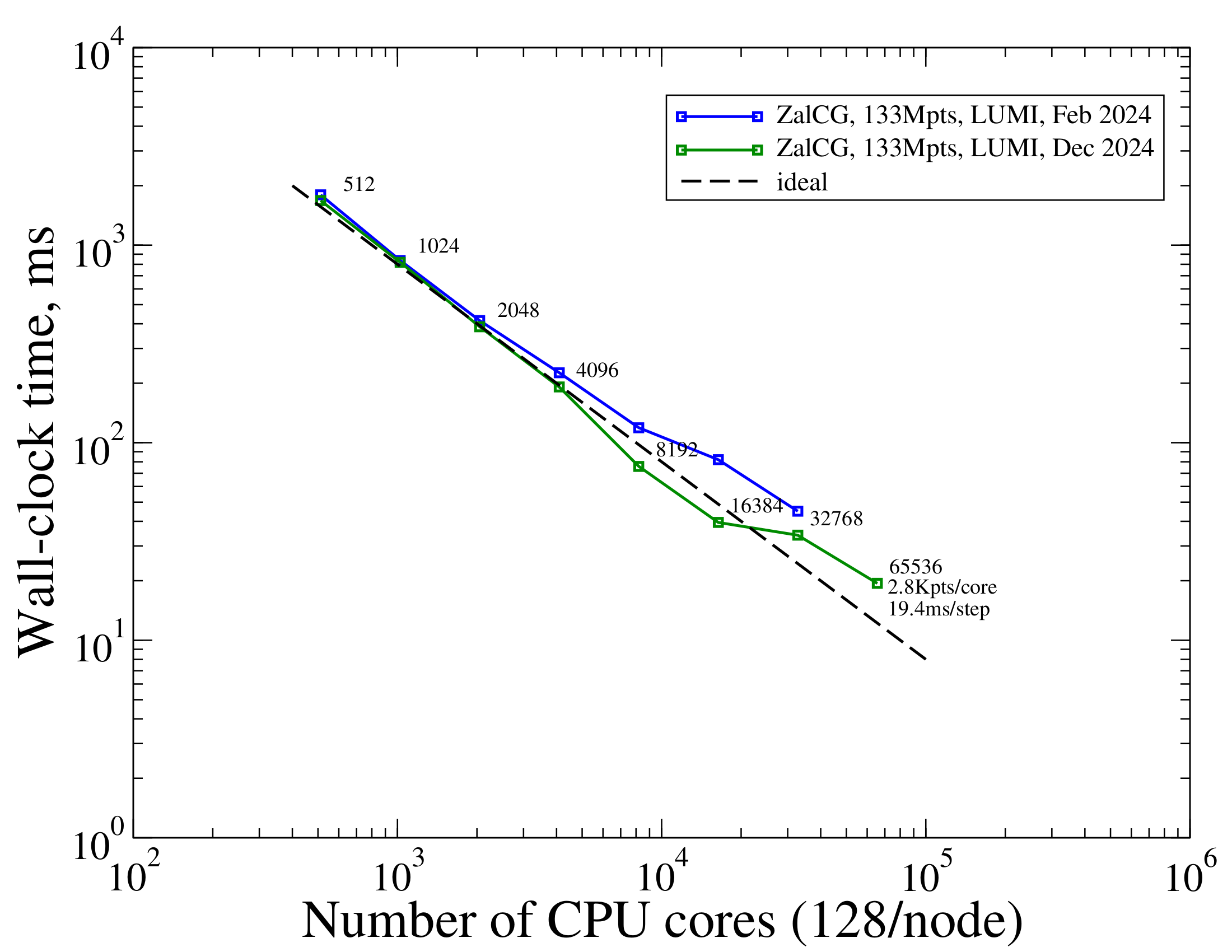

Approximately 10 months after the above data were collected, the same benchmark series was rerun on the same machine, using the same code and computing the same problem (nelem=794M, npoin=133M) with the same software configuration. The figure in this section depicts the results.

This figure shows that, though strong scaling is not ideal, using a larger number of CPUs still significantly improves runtimes up to and including the largest run, which employed 65,536 CPU cores. Considering the mesh with 794,029,446 tetrahedra connecting 133,375,577 points, this corresponds to about 2,035 mesh points per CPU core on average, i.e., fewer than 3K. Advancing a single (one-stage) time step takes about 20 milliseconds of wall-clock time on average with this mesh on 65K CPU cores.
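The average per-core workload of the largest run follows from dividing the mesh sizes by the core count. A short check of that arithmetic, using only the numbers quoted above (the variable names are chosen here for illustration):

```python
# Mesh and core counts quoted in the text above.
nelem = 794_029_446   # tetrahedra
npoin = 133_375_577   # mesh points
ncores = 65_536       # CPU cores in the largest run

# Average workload per CPU core.
points_per_core = npoin / ncores
cells_per_core = nelem / ncores

print(f"points per core: {points_per_core:.0f}")
print(f"cells per core:  {cells_per_core:.0f}")
```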

Comparing the 133M-point (794M-cell) series in the two figures also reveals that they differ at and above approximately 16K CPUs. We believe this may be due to differences in the configuration of the hardware, the operating system, or the network interconnect, and/or to different background loads on the machine between the two series.